Unlocking AI's Future: Evolution and Challenges


Table of Contents

- Introduction
- Understanding Deep Learning Systems
  - Evolution of Deep Learning
  - Challenges in Deep Learning Systems
  - Role of Frameworks
- Hardware Architecture for Deep Learning
  - Traditional x86 Architecture
  - Graphics Processing Units (GPUs)
  - Dedicated Accelerators
- Deep Learning at the Edge
  - Constraints and Considerations
  - Specialized IP for Edge Devices
- Evolution of AI Frameworks
  - From Caffe to TensorFlow and PyTorch
  - Flexibility and Speed in Frameworks
- Deep Learning Compilers: The Future
  - Challenges and Solutions
  - Role of Deep Learning Compilers
- Democratization of AI Development
  - Python and JavaScript Developers
  - Frameworks' Accessibility
- Future Trends and Research
  - Complex Workloads in AI
  - Importance of General-Purpose Hardware
- Debating the Singularity
  - Understanding AGI
  - Challenges and Skepticism
- Conclusion

Introduction

Hey everyone! Thank you for joining us today for our special live stream with Intel. I'm so excited about the discussion today. Joining me is Andres Rodriguez, a senior principal engineer at Intel. Andres, it's great to have you here!

Understanding Deep Learning Systems

Evolution of Deep Learning

Every three to four months, the number of computations required to train state-of-the-art models doubles. This exponential growth puts heavy pressure on hardware architecture and system design.
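
To make that rate concrete: if demand doubles every T months, it grows by a factor of 2^(months / T). The short sketch below just evaluates that formula for the three- and four-month doubling periods mentioned above; the function name is hypothetical and nothing beyond the arithmetic is implied.

```python
def growth_factor(months: float, doubling_period_months: float) -> float:
    """Multiplicative growth after `months` if demand doubles every
    `doubling_period_months` months: 2 ** (months / period)."""
    return 2.0 ** (months / doubling_period_months)


if __name__ == "__main__":
    # Doubling periods taken from the text; horizons of one and two years.
    for period in (3, 4):
        yearly = growth_factor(12, period)
        two_years = growth_factor(24, period)
        print(f"doubling every {period} months: "
              f"x{yearly:.0f} per year, x{two_years:.0f} over two years")
```

Doubling every three months works out to 16x per year and 256x over two years; every four months gives 8x and 64x.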

Challenges in Deep Learning Systems

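As deep learning moves from research into industry, the problems it tackles grow more complex. This calls for a holistic approach to system design, in which hardware, frameworks, and compilers are considered together rather than in isolation.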

Role of Frameworks

Frameworks like TensorFlow and PyTorch have evolved significantly over the past decade, offering both speed and flexibility in deploying deep learning models.

Hardware Architecture for Deep Learning

Traditional x86 Architecture

While traditional x86 architecture is versatile, the demand for deep learning computations has led to the adoption of GPUs and dedicated accelerators.

Graphics Processing Units (GPUs)

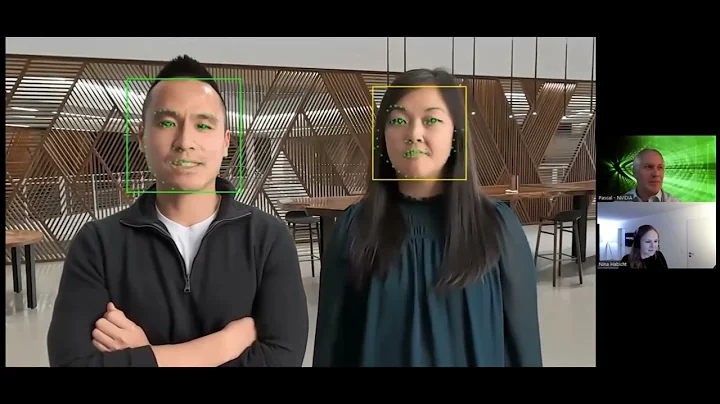

GPUs offer high compute throughput, and advances such as NVIDIA's Tensor Cores, dedicated units for matrix multiply-accumulate operations, have further boosted their deep learning performance.
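
A Tensor Core performs a fused matrix multiply-accumulate, D = A × B + C, on a small tile in one operation. First-generation (Volta) Tensor Cores work on 4×4 tiles with FP16 inputs and FP16 or FP32 accumulation. The pure-Python sketch below only emulates that tiling pattern on the CPU, to show how a large matrix product breaks down into tile-sized multiply-accumulate steps. It is not how GPU code is written. The 4×4 tile size and the FP16 rounding through the `struct` module's half-precision `"e"` format are illustrative assumptions, and the function names are hypothetical.

```python
import struct

TILE = 4  # tile edge length, mirroring the 4x4 tiles of first-generation Tensor Cores


def to_fp16(x: float) -> float:
    """Round a number to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def tile_mma(a, b, c, i0, j0, k0):
    """One tile-sized multiply-accumulate: C[i][j] += sum_k A[i][k] * B[k][j]
    over a TILE x TILE x TILE block. Inputs are rounded to FP16; the accumulator
    is a Python float (double precision), standing in for a wider accumulator."""
    for i in range(i0, i0 + TILE):
        for j in range(j0, j0 + TILE):
            acc = c[i][j]
            for k in range(k0, k0 + TILE):
                acc += to_fp16(a[i][k]) * to_fp16(b[k][j])
            c[i][j] = acc


def tiled_matmul(a, b):
    """Compute A @ B for square matrices whose size is a multiple of TILE
    by sweeping tile_mma over every (i, j, k) block."""
    n = len(a)
    assert n % TILE == 0, "matrix size must be a multiple of TILE"
    c = [[0.0] * n for _ in range(n)]
    for i0 in range(0, n, TILE):
        for j0 in range(0, n, TILE):
            for k0 in range(0, n, TILE):
                tile_mma(a, b, c, i0, j0, k0)
    return c
```

For small integer inputs the FP16 rounding is exact, so `tiled_matmul` matches an ordinary matrix product; for values such as 0.1 the rounding becomes visible, which is why real hardware pairs low-precision inputs with a wider accumulator.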

Dedicated Accelerators

Various startups and established companies are developing dedicated accelerators tailored for deep learning tasks, enhancing both training and inference.

Deep Learning at the Edge

Constraints and Considerations

Edge devices face constraints such as limited memory and power, necessitating specialized accelerators for efficient deep learning inference.

Specialized IP for Edge Devices

To address these constraints, edge devices incorporate specialized IP blocks (dedicated silicon designs) for deep learning inference, enabling real-time inference within tight memory and power budgets.
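
A back-of-the-envelope calculation shows why memory is a binding constraint at the edge: storing the weights alone takes the parameter count times the bytes per parameter. The sketch below uses a hypothetical 25-million-parameter model and a hypothetical 64 MB memory budget. Comparing numeric formats is an assumption added for illustration; the text does not name a specific technique for shrinking models.

```python
# Bytes needed per weight in a few common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_footprint_mb(num_params: int, dtype: str) -> float:
    """Memory for the weights alone, in megabytes (10**6 bytes).
    Activations, buffers, and runtime overhead are ignored."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


def fits(num_params: int, dtype: str, budget_mb: float) -> bool:
    """True if the weights fit within the given memory budget."""
    return weight_footprint_mb(num_params, dtype) <= budget_mb


if __name__ == "__main__":
    params = 25_000_000  # hypothetical model size
    budget = 64.0        # hypothetical on-device memory budget, in MB
    for dtype in BYTES_PER_PARAM:
        mb = weight_footprint_mb(params, dtype)
        print(f"{dtype}: {mb:.0f} MB, fits in {budget:.0f} MB: {fits(params, dtype, budget)}")
```

Under these assumed numbers, the FP32 weights (100 MB) overflow the budget while the FP16 and INT8 versions (50 MB and 25 MB) fit, before any working memory for activations is counted.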

Evolution of AI Frameworks

From Caffe to TensorFlow and PyTorch

The evolution of AI frameworks, from early tools such as Caffe to TensorFlow and PyTorch, has democratized the development of deep learning models, enabling even Python and JavaScript developers to leverage their capabilities.

Flexibility and Speed in Frameworks

Modern frameworks like TensorFlow and PyTorch offer both speed and flexibility, catering to a diverse range of deep learning applications.

Deep Learning Compilers: The Future

Challenges and Solutions

Hand-tuning kernels for every operation on every hardware target does not scale as hardware diversity grows. This makes deep learning compilers crucial for efficiently mapping computations to the many available hardware backends.

Role of Deep Learning Compilers

Deep learning compilers automate the mapping of operations to hardware backends, aiming for high performance across diverse hardware architectures without hand-written kernels for each one.
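
The core idea of mapping framework operations onto whatever backend is available can be sketched as a simple fusion pass followed by a lookup from (operation, backend) pairs to kernels. Everything here is hypothetical: the op names, backend names, kernel names, and the single fusion rule are invented for illustration and do not correspond to any real compiler's IR or API. Real compilers also handle memory layout, tiling, and code generation.

```python
from typing import Dict, List, Tuple

# Hypothetical kernel registry: (op, backend) -> kernel name.
KERNELS: Dict[Tuple[str, str], str] = {
    ("matmul", "cpu"): "cpu_gemm",
    ("add", "cpu"): "cpu_add",
    ("relu", "cpu"): "cpu_relu",
    ("matmul", "gpu"): "gpu_gemm",
    ("add", "gpu"): "gpu_add",
    ("relu", "gpu"): "gpu_relu",
    ("matmul_add_relu", "gpu"): "gpu_fused_gemm_bias_relu",
}

# Hypothetical fusion rule: fused op name -> the sequence of ops it replaces.
FUSED = {"matmul_add_relu": ["matmul", "add", "relu"]}


def fuse(ops: List[str]) -> List[str]:
    """Toy fusion pass: replace each consecutive matmul, add, relu with one fused op."""
    pattern = FUSED["matmul_add_relu"]
    out, i = [], 0
    while i < len(ops):
        if ops[i:i + len(pattern)] == pattern:
            out.append("matmul_add_relu")
            i += len(pattern)
        else:
            out.append(ops[i])
            i += 1
    return out


def lower(ops: List[str], backend: str) -> List[str]:
    """Fuse, then map each op to a backend kernel. If a fused op has no kernel
    on this backend, fall back to the kernels for its unfused parts."""
    kernels = []
    for op in fuse(ops):
        if (op, backend) in KERNELS:
            kernels.append(KERNELS[(op, backend)])
        elif op in FUSED:
            kernels.extend(KERNELS[(part, backend)] for part in FUSED[op])
        else:
            raise NotImplementedError(f"no kernel for {op!r} on {backend!r}")
    return kernels
```

For the graph `["matmul", "add", "relu", "matmul"]`, lowering to `"gpu"` yields one fused kernel plus a GEMM, while lowering to `"cpu"` falls back to four separate kernels. The same model description reaches both backends without the developer choosing kernels by hand.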

Democratization of AI Development

Python and JavaScript Developers

The accessibility of AI frameworks allows developers with Python and JavaScript backgrounds to quickly learn and apply deep learning techniques.

Frameworks' Accessibility

Frameworks like TensorFlow and PyTorch abstract away complexities, empowering developers to focus on model development rather than low-level implementation details.
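
One of the biggest complexities frameworks hide is gradient computation. The sketch below is a minimal scalar reverse-mode automatic differentiation in plain Python, written only to illustrate the kind of bookkeeping TensorFlow and PyTorch do behind the scenes. The `Value` class and its methods are hypothetical and are not either framework's API.

```python
import math
from typing import List, Tuple


class Value:
    """A scalar that records how it was computed so gradients can flow back."""

    def __init__(self, data: float, parents: Tuple["Value", ...] = (),
                 local_grads: Tuple[float, ...] = ()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # inputs this value was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other: "Value") -> "Value":
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other: "Value") -> "Value":
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def tanh(self) -> "Value":
        t = math.tanh(self.data)
        return Value(t, (self,), (1.0 - t * t,))

    def backward(self) -> None:
        """Visit nodes in reverse topological order and apply the chain rule."""
        order: List["Value"] = []
        seen = set()

        def visit(v: "Value") -> None:
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)  # parents are appended before their children

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            for parent, local in zip(v._parents, v._local_grads):
                parent.grad += local * v.grad


if __name__ == "__main__":
    w, x, b = Value(2.0), Value(3.0), Value(-5.0)
    y = (w * x + b).tanh()  # y = tanh(w*x + b) = tanh(1.0)
    y.backward()
    print(w.grad, x.grad, b.grad)
```

A framework user writes only the forward expression (the `y = ...` line); the graph recording and the chain-rule pass are the low-level details the framework takes care of.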

Future Trends and Research

Complex Workloads in AI

As AI workloads become more complex, the demand for general-purpose hardware capable of supporting diverse operations grows.

Importance of General-Purpose Hardware

General-purpose hardware becomes essential for accommodating the diverse range of deep learning workloads and ensuring scalability and efficiency.

Debating the Singularity

Understanding AGI

While some speculate about the advent of artificial general intelligence (AGI), significant challenges remain in understanding and replicating human-like consciousness and self-awareness.

Challenges and Skepticism

The path to AGI is uncertain, with many unanswered questions surrounding the mathematics of consciousness. Skepticism persists amid ongoing debates in academic circles.

Conclusion

In conclusion, the field of deep learning systems continues to evolve rapidly, driven by advancements in hardware, frameworks, and research. While challenges remain, the democratization of AI development and ongoing innovations offer promising opportunities for addressing complex real-world problems.

Highlights

- The exponential growth of computations in deep learning presents challenges in hardware architecture and system design.

- Frameworks like TensorFlow and PyTorch democratize AI development, enabling even Python and JavaScript developers to leverage deep learning capabilities.

- Deep learning compilers are poised to play a crucial role in optimizing computations for diverse hardware architectures, ensuring scalability and efficiency.

- Skepticism persists regarding the concept of the singularity, highlighting the need for further research and understanding in the field of artificial general intelligence (AGI).

FAQ

Q: What are some challenges in deep learning systems?

A: Deep learning systems face challenges such as exponential growth in computations, hardware limitations, and the need for efficient system design to accommodate complex workloads.

Q: How accessible are AI frameworks to developers with non-AI backgrounds?

A: Modern AI frameworks like TensorFlow and PyTorch abstract away complexities, making them accessible to developers with Python and JavaScript backgrounds, enabling rapid learning and application of deep learning techniques.

Q: What role do deep learning compilers play in system optimization?

A: Deep learning compilers automate the mapping of operations to hardware backends, aiming for high performance across diverse architectures and reducing the manual effort of system optimization.

Q: Is the concept of the singularity widely accepted in the AI community?

A: The singularity, a hypothetical point often associated with the advent of artificial general intelligence (AGI), remains a topic of debate in the AI community, with skepticism persisting amid unresolved questions surrounding consciousness and self-awareness.